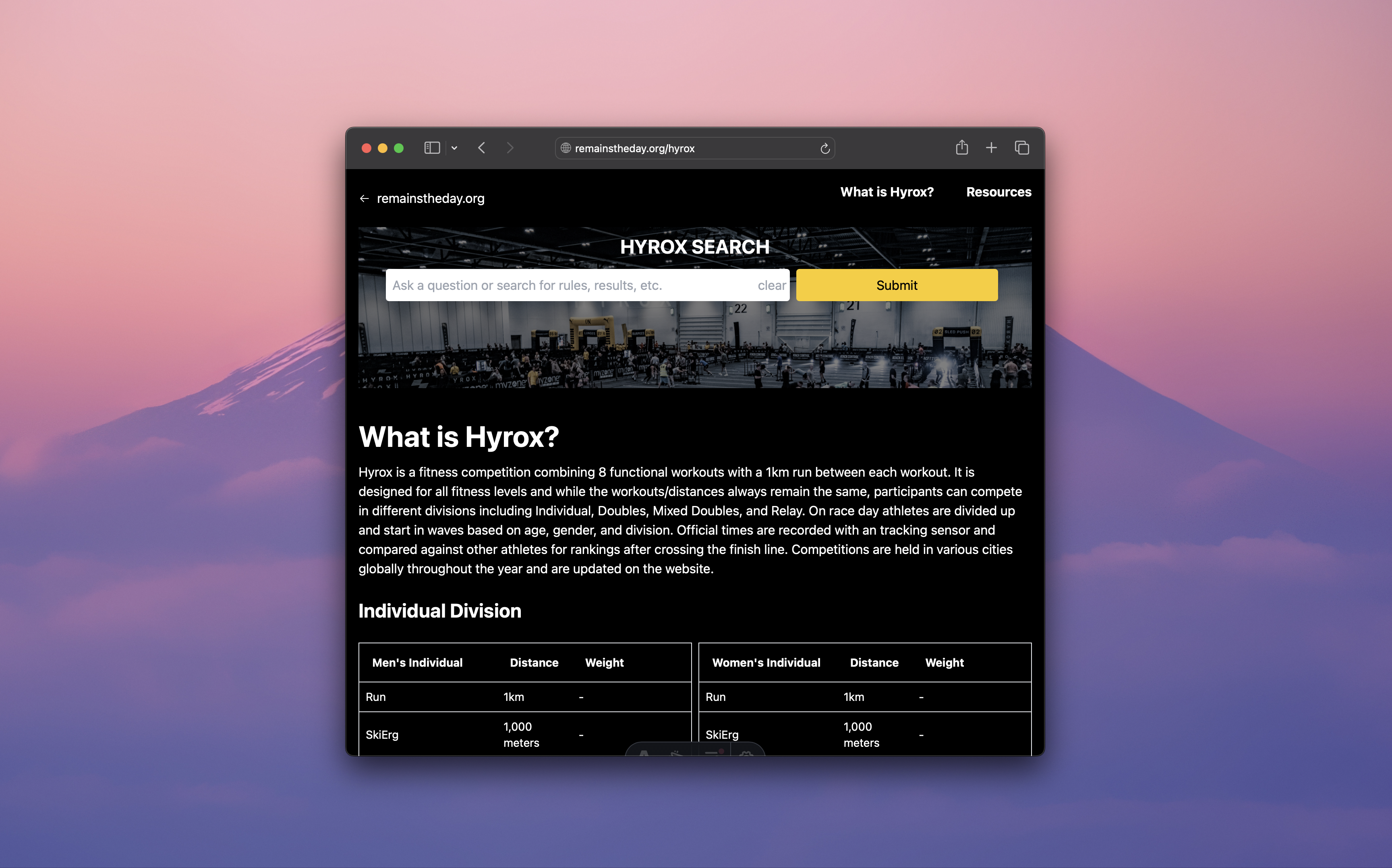

Hyrox Discovery Engine

Recently I decided to train a custom Large Language Model (LLM) on all the information I could find related to the sport of Hyrox. The result is a specialized “search engine” that is highly knowledgeable about this new sport. For a “Hyrox AI Search Engine” to be useful, it needs a mixture of fine-tuning, SQL data querying, and Retrieval-Augmented Generation (RAG). Luckily, tools like LangChain make it possible for engineers like me to work with AI models without implementing everything from scratch. For the LLM to be useful, I also had to implement guardrails throughout the application stack so it doesn’t drift into irrelevant questions and answers. The final project came together nicely, and I see potential for niche communities to benefit from something like this.

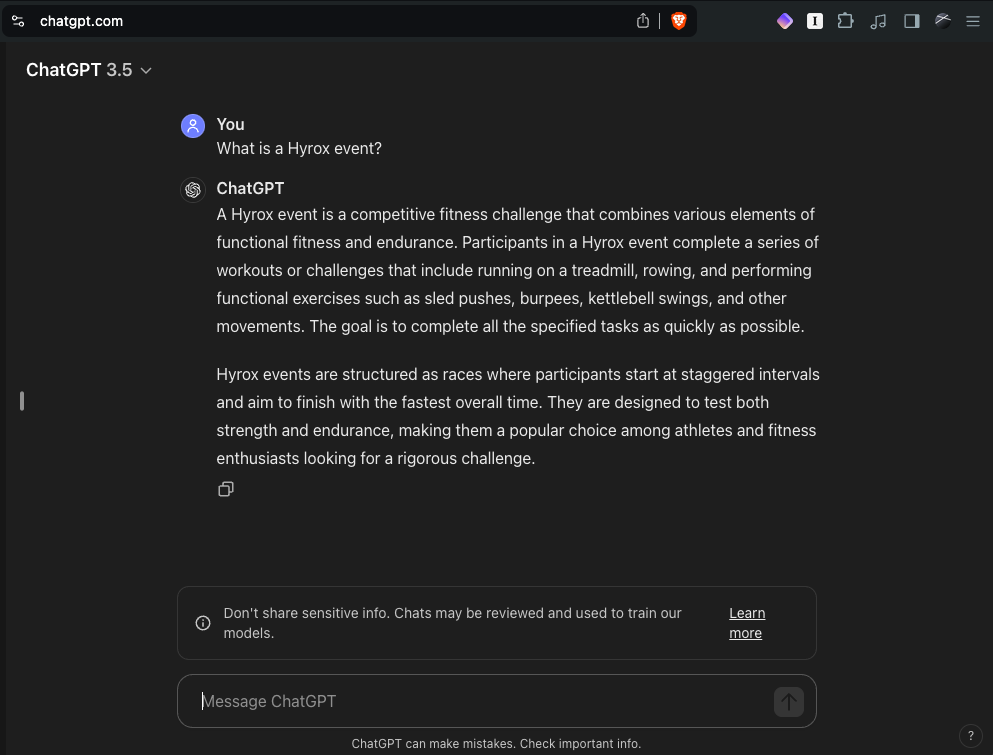

What about ChatGPT?

While ChatGPT is certainly the most popular LLM available today, it sometimes struggles to provide meaningful or even accurate information on niche subjects. Even a simple question like “what is a Hyrox event?” shows that ChatGPT cannot be trusted here (kettlebell swings are not part of Hyrox racing). ChatGPT brought LLMs to the general public, but open-source models and the engineers who build on them will be responsible for integrating LLM functionality into the applications we all use today.

I’m betting that custom “AI agents” trained on a certain subject, maintained by specialists, and regularly updated will be how this new technology shines in the near future.

Open Source FTW

Meta’s Llama 3 (the model family I used in this application) can run locally on your own computer and be integrated into existing applications, rather than keeping your AI interactions inside ChatGPT’s black box.

Is it actually useful?

To measure usefulness, I created a set of increasingly complex questions that people are likely to ask about Hyrox and then compared ChatGPT’s answers with those from remainstheday.org/hyrox. As an athlete and a member of the Hyrox community myself, my expectations were high. I needed this model not only to provide better answers than ChatGPT but also to teach me something new and accurate about the sport. Imagine being able to immediately answer questions like:

| Question | ChatGPT score | Custom LLM score |
|---|---|---|
| What is a Hyrox competition? | 90 | 100 |
| What is the average finishing time for males 30-34 years old? | 90 | 100 |
| In doubles how many times can partners swap places during the Ski Erg? | 90 | 100 |

Ultimately, the community will determine its usefulness, but I’m convinced it’s already more useful than Hyrox’s own website.

Technology Stack

The entire application is made up of a PostgreSQL database, an open-source LLM (Llama 3.1), and a single-page React.js application for the front end.
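The post doesn’t spell out how a question reaches the right data source. One simple, hypothetical approach is a keyword router that sends statistics questions to the PostgreSQL results data and everything else to the document store; a LangChain agent can instead let the LLM make this choice. The pattern, the `Route` names, and `routeQuestion` are assumptions for illustration, not the project’s code.

```typescript
// Hypothetical router: decide whether a question should be answered from
// structured race results (SQL) or from rulebook passages (retrieval).
// The keyword pattern is an illustrative assumption, not the project's logic.
type Route = "sql" | "documents";

// Words that suggest an aggregate over race results rather than a rules lookup.
const STATS_PATTERN = /\b(average|median|fastest|slowest|finishing time|percentile)\b/i;

function routeQuestion(question: string): Route {
  return STATS_PATTERN.test(question) ? "sql" : "documents";
}

// Usage with two of the sample questions from the comparison table.
console.log(routeQuestion("What is the average finishing time for males 30-34 years old?")); // sql
console.log(routeQuestion("In doubles how many times can partners swap places during the Ski Erg?")); // documents
```

A keyword rule is cheap and predictable, but it misses phrasings it was not written for, which is why letting the LLM pick a tool is the more common choice once the number of data sources grows.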

I trained the LLM locally on an M1 MacBook Pro using PDF documents created mostly from web pages. The following frameworks were instrumental in this step:

- LangChain

- LlamaIndex

- Supabase Vector DB
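In this stack, LangChain, LlamaIndex, and the Supabase vector store handle embedding and similarity search. The sketch below only illustrates the retrieval idea in plain TypeScript: each passage becomes a vector, and the passages closest to the question (by cosine similarity) are returned. A bag-of-words term count stands in for a real embedding model, and `embed`, `cosine`, `topK`, and the sample passages are hypothetical.

```typescript
// Toy vector retrieval. A term-frequency map stands in for a real embedding
// model; the passages are illustrative samples, not rulebook quotes.
type SparseVector = Record<string, number>;

function embed(text: string): SparseVector {
  // Object.create(null) avoids collisions with keys like "constructor".
  const vec: SparseVector = Object.create(null);
  const tokens = text.toLowerCase().match(/[a-z0-9]+/g) || [];
  for (const token of tokens) {
    vec[token] = (vec[token] || 0) + 1;
  }
  return vec;
}

function norm(v: SparseVector): number {
  return Math.sqrt(Object.keys(v).reduce((sum, k) => sum + v[k] * v[k], 0));
}

function cosine(a: SparseVector, b: SparseVector): number {
  let dot = 0;
  for (const token of Object.keys(a)) {
    if (token in b) dot += a[token] * b[token];
  }
  const denom = norm(a) * norm(b);
  return denom === 0 ? 0 : dot / denom;
}

// Return the k passages most similar to the query, best match first.
function topK(query: string, passages: string[], k: number): string[] {
  const q = embed(query);
  return passages
    .map((text) => ({ text, score: cosine(q, embed(text)) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((hit) => hit.text);
}

const samplePassages = [
  "The SkiErg station covers 1000 metres.",
  "Athletes run 1 km before each workout station.",
  "Wall balls are the final station.",
];

// The SkiErg passage shares the most terms with the question, so it ranks first.
console.log(topK("How long is the SkiErg?", samplePassages, 1));
```

The retrieved passages are then placed into the LLM’s prompt as context, which is what lets the model answer from the rulebook rather than from memory.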

Meta’s open-source Llama 3.1 model is proving to be one of the best options available for projects on a hobbyist budget like mine. Hosting LLMs, however, is relatively expensive, so I saved costs by training my model locally and then deploying it to Replicate for production use.

Guardrails

When I set out to build this, my first concern was making sure the LLM knows which questions and topics to avoid. If the user asks “how can I take 3 strokes off my golf game?” or “what is the capital of Montana?”, the assistant needs to respond with something along the lines of “That question is outside the scope of my capabilities. I am only trained to answer questions regarding the sport of Hyrox.” To do this, I implemented the following guardrails:

- Enforce a character limit on the prompt input and reject unusual inputs such as code injection attempts. This keeps user prompts short and sweet.

- Rate limit the API endpoint. To prevent “spam attacks,” where a person or bot rapidly submits a flood of questions, the application limits API requests per user. This also protects me from runaway server costs.

- Use prompt engineering to stay on topic and prevent LLM abuse. In this application there is never a use case for profanity or for responding to subjects unrelated to Hyrox. To guard against whatever prompts a user might enter, I wrote a set of instructions that tell the LLM up front how to respond when certain keywords appear in the prompt.
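The three guardrails can be sketched in TypeScript as follows. This is a minimal illustration, not the production code: the character limit, the regex heuristic, the rate-limit numbers, and the names `validatePrompt`, `FixedWindowRateLimiter`, `SYSTEM_PROMPT`, and `buildMessages` are all hypothetical. The refusal wording is taken from the example response above.

```typescript
// Guardrail sketch. Limits, regex, and prompt wording are hypothetical values
// for illustration, not the production configuration.

// 1. Input validation: cap the length and reject obviously code-like input.
const MAX_PROMPT_CHARS = 300;
// Heuristic: HTML/script tags, Markdown code fences, or chained SQL statements.
const SUSPICIOUS_INPUT = /<\s*\/?\s*[a-z]+[^>]*>|`{3}|;\s*(drop|delete|insert|update)\b/i;

interface ValidationResult {
  ok: boolean;
  reason?: string;
}

function validatePrompt(input: string): ValidationResult {
  const trimmed = input.trim();
  if (trimmed.length === 0) return { ok: false, reason: "empty prompt" };
  if (trimmed.length > MAX_PROMPT_CHARS) return { ok: false, reason: "prompt too long" };
  if (SUSPICIOUS_INPUT.test(trimmed)) return { ok: false, reason: "suspicious input" };
  return { ok: true };
}

// 2. Rate limiting: at most `limit` requests per user per fixed time window.
class FixedWindowRateLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  private readonly windows: Record<string, { start: number; count: number }> = Object.create(null);

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  allow(userId: string, now: number = Date.now()): boolean {
    const current = this.windows[userId];
    if (current === undefined || now - current.start >= this.windowMs) {
      // First request, or the previous window expired: open a new window.
      this.windows[userId] = { start: now, count: 1 };
      return true;
    }
    if (current.count >= this.limit) return false;
    current.count += 1;
    return true;
  }
}

// 3. Prompt engineering: a fixed system message sent ahead of every user prompt.
const SYSTEM_PROMPT = [
  "You only answer questions about the sport of Hyrox.",
  "If a question is unrelated to Hyrox, reply: \"That question is outside the scope of my capabilities. I am only trained to answer questions regarding the sport of Hyrox.\"",
  "Never use profanity.",
].join(" ");

interface ChatMessage {
  role: "system" | "user";
  content: string;
}

function buildMessages(userPrompt: string): ChatMessage[] {
  return [
    { role: "system", content: SYSTEM_PROMPT },
    { role: "user", content: userPrompt.trim() },
  ];
}

// Usage: hypothetical limit of 5 requests per minute per user.
const limiter = new FixedWindowRateLimiter(5, 60000);
const question = "What is a Hyrox competition?";
if (validatePrompt(question).ok && limiter.allow("user-123")) {
  console.log(buildMessages(question)); // system message first, then the user prompt
}
```

Cheap checks (validation and rate limiting) run before the model is called, so rejected requests never incur LLM cost; the system prompt is the last line of defense for inputs that pass both.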

Data Set

For the LLM to be as factual as possible, I trained it on publicly available information from the official rulebook on hyrox.com and other reliable sources on the internet.
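For the retrieval side of the stack, long documents such as the rulebook are usually split into overlapping chunks before they are embedded and stored, so each stored passage is small enough to fit in a prompt without cutting context at hard boundaries. A minimal sketch, assuming word-based windows; `chunkText` and the sizes are hypothetical, and LangChain and LlamaIndex ship their own text splitters that would normally do this job.

```typescript
// Hypothetical preprocessing step: split text into overlapping word windows.
// chunkSize and overlap are measured in words; the values used are illustrative.
function chunkText(text: string, chunkSize: number, overlap: number): string[] {
  if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
    throw new Error("require chunkSize > 0 and 0 <= overlap < chunkSize");
  }
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  const step = chunkSize - overlap; // each window starts `step` words after the last
  for (let start = 0; start < words.length; start += step) {
    chunks.push(words.slice(start, start + chunkSize).join(" "));
    if (start + chunkSize >= words.length) break; // last window reached the end
  }
  return chunks;
}

console.log(chunkText("a b c d e f g", 3, 1)); // → ["a b c", "c d e", "e f g"]
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of storing some text twice.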

Future Improvements

For now, I am simply collecting feedback on the current version of this project to help me decide which direction to take it.

In the future this “AI Assistant” could take different forms and doesn’t necessarily have to be a chat interface. It could also be used alongside other

Additional Resources

How to migrate from legacy LangChain agents to LangGraph | 🦜️🔗 Langchain

createOpenAIToolsAgent | LangChain.js - v0.2.12

Build a Question/Answering system over SQL data | 🦜️🔗 Langchain

SqlToolkit | LangChain.js - v0.2.11

The Biggest Issues I’ve Faced Web Scraping (and how to fix them)

How to Finetune and Inference Llama-3 - Inferless

Fine-tune an LLM in minutes (ft. Llama 2, CodeLlama, Mistral, etc.)

“I want Llama3 to perform 10x with my private knowledge” - Local Agentic RAG w/ llama3

How to connect LLM to SQL database with LangChain SQLChain

1. Introduction to RAG with Langchain — Ragatouille

Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer